Goals

- Create a full-text search service on top of Firestore.

- Keep the development effort low.

- Cheap to run on a small scale.

- Scalable and flexible.

Motivation

Users expect Google-like search in their apps these days. For example, you may want to search for recipes containing a certain word or ingredient. Firestore doesn't support full-text indexing or search of text fields in documents, and downloading all recipes just to show a few of them in the app is impractical and does not scale.

Topics covered

- Develop an HTTP service with Python and FastAPI

- Run the HTTP service on Google Cloud Run

- Test the HTTP service (unit, component and system tests)

- Create an HTTP API gateway with NGINX for testing and Google Cloud Endpoints for deployment

- Handle Firebase Firestore events with Cloud Functions

- Integrate with Algolia for full-text search

- Update the Algolia search index

- Deploy the Cloud Function, Cloud Run service and Cloud Endpoints with Terraform

- Test the Firestore event handler with the Firestore emulator

- Run and test everything locally with docker-compose

Prerequisite

An app where users can create, update or delete recipes. Recipes are stored in Firebase Firestore running in Native mode, a popular choice because of its offline support, realtime updates and multi-platform support.

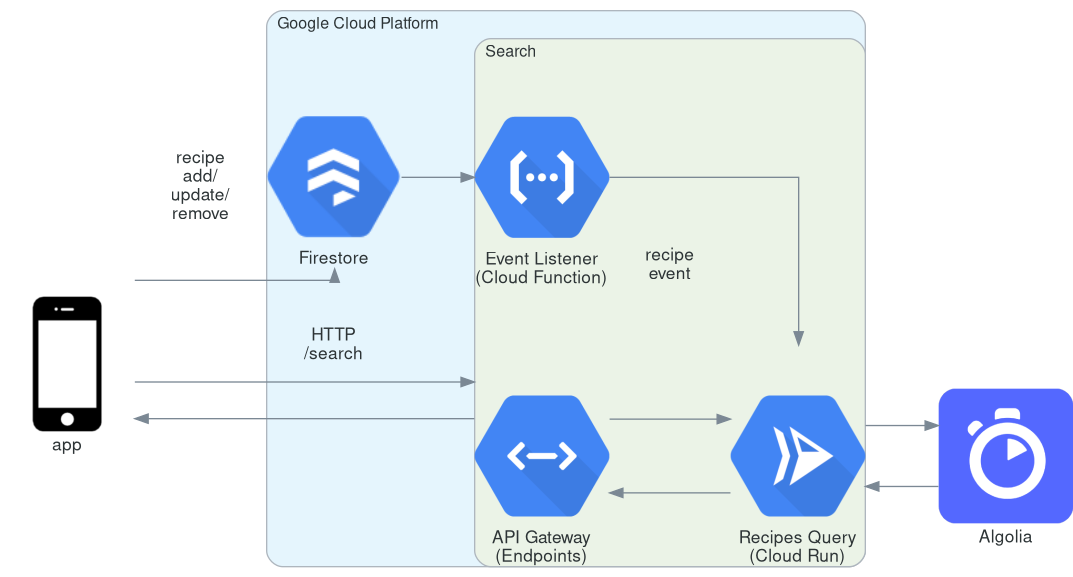

Architecture

The search service consists of three components:

- HTTP API gateway, based on Google Cloud Endpoints. The app uses it to search for recipes.

- Recipes Query node, running on Cloud Run. It indexes recipes and handles search requests.

- Firestore Events Listener, a Cloud Function. It intercepts Firestore events (recipe add/update/delete) and passes them on to the Recipes Query service.

Why do you need the Events Listener? Why not listen to Firestore events in the Recipes Query service directly? At the time of writing, Cloud Run does not support Firestore triggers.

Algolia, a search-as-a-service provider, is used to speed up development. The Recipes Query node is just a thin wrapper around Algolia's API.

Why have Recipes Query at all, then?

- It keeps the app decoupled from additional third-party APIs.

- The search backend can easily be swapped for e.g. PostgreSQL or Elasticsearch with no app changes.

- The increased latency is acceptable.
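To make the second point concrete, here is a minimal sketch, assuming a hypothetical `SearchBackend` interface that is not part of the project: the handler depends only on the interface, and a toy in-memory inverted index stands in for Algolia. Moving to PostgreSQL or Elasticsearch would mean writing another class with the same three methods, while the response the app sees stays the same. Note that `hits` here is a list of recipe ids, unlike the echo string returned by the skeleton service.

```python
from collections import defaultdict
from typing import Dict, List, Protocol, Set


class SearchBackend(Protocol):
    """Hypothetical interface the Recipes Query service could code against."""

    def index(self, recipe_id: str, text: str) -> None: ...

    def remove(self, recipe_id: str) -> None: ...

    def search(self, query: str) -> List[str]: ...


class InMemoryBackend:
    """Toy stand-in for Algolia: an inverted index mapping words to recipe ids."""

    def __init__(self) -> None:
        self._ids_by_word: Dict[str, Set[str]] = defaultdict(set)
        self._words_by_id: Dict[str, Set[str]] = {}

    def index(self, recipe_id: str, text: str) -> None:
        self.remove(recipe_id)  # re-indexing a recipe replaces its old words
        words = set(text.lower().split())
        self._words_by_id[recipe_id] = words
        for word in words:
            self._ids_by_word[word].add(recipe_id)

    def remove(self, recipe_id: str) -> None:
        for word in self._words_by_id.pop(recipe_id, set()):
            self._ids_by_word[word].discard(recipe_id)

    def search(self, query: str) -> List[str]:
        words = query.lower().split()
        if not words:
            return []
        # A recipe matches only if it contains every query word (exact words, no stemming).
        matches = set.intersection(*(self._ids_by_word.get(w, set()) for w in words))
        return sorted(matches)


def recipes_query(backend: SearchBackend, query: str) -> Dict[str, List[str]]:
    # The handler only knows the interface, so the backend can be swapped freely.
    return {"hits": backend.search(query)}
```

For example, after indexing "Beef stew with carrots" as `r1` and "Vegetable stew" as `r2`, `recipes_query(backend, "stew")` returns both ids, while "beef stew" returns only `r1`.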

Search implementation details:

- Python

- FastAPI for API handling

- flake8 and black for code style and formatting

- docker-compose for local development and testing

- Terraform for infrastructure as code

Recipes Query skeleton

It exposes POST /v1/recipes/query, which is used to search for recipes.

Usage example:

```shell
curl -X POST \
  -d '{ "params": "query=stew" }' \
  "https://${RECIPE_QUERY_URL}/v1/recipes/query"
```

For now it just echoes back the params value, since the focus is on the service skeleton only:

```json
{
  "hits": "query=stew"
}
```
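The `params` value looks like a URL-encoded query string (`query=stew`), resembling the `params` string accepted by Algolia's REST query endpoint. Assuming that format, a later version of the handler could extract the search term with the standard library instead of echoing the whole string. `parse_search_params` and `extract_query` are hypothetical helpers, not part of the skeleton:

```python
from typing import Dict
from urllib.parse import parse_qs


def parse_search_params(params: str) -> Dict[str, str]:
    """Decode a params string such as "query=stew&hitsPerPage=5".

    Assumption: params is URL-encoded; only the first value of each key is kept.
    """
    parsed = parse_qs(params, keep_blank_values=True)
    return {key: values[0] for key, values in parsed.items()}


def extract_query(params: str) -> str:
    # Fall back to an empty query when the "query" key is absent.
    return parse_search_params(params).get("query", "")
```

With this, `extract_query("query=stew")` yields `"stew"`, and percent-encoded terms such as `query=beef%20stew` are decoded to `"beef stew"`.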

NGINX is used as an API gateway placeholder for local testing. It is later replaced by Cloud Endpoints.

Recipes Query skeleton source code:

```text
$ tree
.
├── docker-compose.yml
├── endpoints
│   └── nginx.conf
└── recipes
    ├── Dockerfile
    ├── requirements.txt
    ├── service
    │   ├── api
    │   │   ├── __init__.py
    │   │   ├── ping.py
    │   │   └── recipes.py
    │   ├── config.py
    │   ├── __init__.py
    │   ├── main.py
    │   └── schemas.py
    └── tests
        ├── conftest.py
        ├── test_api_recipes.py
        └── test_schemas.py

5 directories, 14 files
```

docker-compose.yml:

```yaml
version: '3.8'

services:
  recipes:
    build: ./recipes
    command: uvicorn service.main:app --reload --workers 1 --host 0.0.0.0 --port 8000
    volumes:
      - ./recipes/:/recipes
    ports:
      - 6758:8000
    environment:
      - ENVIRONMENT=dev
      - TESTING=0

  nginx:
    image: nginx:1.19.8
    ports:
      - 8080:8080
    volumes:
      - ./endpoints/nginx.conf:/etc/nginx/conf.d/default.conf
    depends_on:
      - recipes
```

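endpoints/nginx.conf:

```nginx
server {
    listen 8080;

    location /v1/recipes/ {
        # The trailing slash replaces the /v1/recipes/ prefix with /,
        # so /v1/recipes/query is forwarded as /query.
        proxy_pass http://recipes:8000/;
    }
}
```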

recipes/.dockerignore:

```text
env
.dockerignore
Dockerfile
*.pyc
*.pyo
*.pyd
__pycache__
```

flake8 configuration (88 characters matches black's default line length):

```ini
[flake8]
max-line-length = 88
```

recipes/Dockerfile:

```dockerfile
FROM gcr.io/nutimi-build/python:3.8.3-slim-buster

# set working directory
WORKDIR /recipes

# set environment variables
ENV PYTHONDONTWRITEBYTECODE 1
ENV PYTHONUNBUFFERED 1

# install system dependencies
RUN apt-get update \
  && apt-get -y install netcat gcc \
  && apt-get clean

# install python dependencies
# (the source code is not copied: docker-compose mounts ./recipes into /recipes)
RUN pip install --upgrade pip
COPY ./requirements.txt .
RUN pip install -r requirements.txt
```

recipes/requirements.txt:

```text
asyncpg==0.20.1
black==19.10b0
fastapi==0.58.1
flake8==3.8.3
gunicorn==20.0.4
isort==5.2.2
pytest-cov==2.10.1
pytest==5.4.2
requests==2.23.0
uvicorn==0.11.5
```

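recipes/service/__init__.py:

```python
import os
import sys

# Put the service package directory on sys.path so that modules inside it
# can use short imports such as `from api import ...` and `from schemas import ...`.
sys.path.append(os.path.dirname(os.path.realpath(__file__)))
```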

recipes/service/api/ping.py:

```python
from fastapi import APIRouter, Depends

from service.config import Settings, get_settings

router = APIRouter()


@router.get("/ping")
async def pong(settings: Settings = Depends(get_settings)):
    return {
        "ping": "pong!",
        "environment": settings.environment,
        "testing": settings.testing,
    }
```

recipes/service/api/recipes.py:

```python
from fastapi import APIRouter

from schemas import RecipesQueryInSchema, RecipesQueryOutSchema

router = APIRouter()


@router.post("/query", response_model=RecipesQueryOutSchema)
async def recipes_query(payload: RecipesQueryInSchema) -> RecipesQueryOutSchema:
    # Skeleton only: echo the raw params string back as "hits".
    return RecipesQueryOutSchema(hits=payload.params)
```

"""Environment specific configuration."""

import logging

import os

from functools import lru_cache

from pydantic import BaseSettings

log = logging.getLogger(__name__)

class Settings(BaseSettings):

environment: str = os.getenv("ENVIRONMENT", "dev")

testing: bool = os.getenv("TESTING", 0)

@lru_cache()

def get_settings() -> BaseSettings:

"""Returns environment settings"""

log.info("Loading config settings from the environment...")

return Settings()
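`get_settings` is decorated with `functools.lru_cache`, so the environment is read once per process and every request using `Depends(get_settings)` receives the same `Settings` instance. The standard-library sketch below mimics that pattern with a plain dataclass instead of pydantic's `BaseSettings` (`PlainSettings` and `get_plain_settings` are hypothetical names), including a simplified version of how an environment string such as `TESTING=0` becomes a boolean:

```python
import os
from dataclasses import dataclass
from functools import lru_cache

# Simplified truthy strings; pydantic accepts a similar, larger set.
_TRUE_VALUES = {"1", "true", "yes", "on"}


@dataclass(frozen=True)
class PlainSettings:
    environment: str
    testing: bool


@lru_cache()
def get_plain_settings() -> PlainSettings:
    # The body runs only on the first call; later calls return the cached object.
    return PlainSettings(
        environment=os.getenv("ENVIRONMENT", "dev"),
        testing=os.getenv("TESTING", "0").strip().lower() in _TRUE_VALUES,
    )
```

A side effect of the cache: environment changes made after the first call are ignored until `get_plain_settings.cache_clear()` (or `get_settings.cache_clear()` in the service) is called, which is handy in tests.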

recipes/service/main.py:

```python
import logging

from fastapi import FastAPI

from api import ping, recipes

log = logging.getLogger(__name__)


def create_application() -> FastAPI:
    # root_path tells FastAPI it is served behind the /v1/recipes proxy prefix.
    application = FastAPI(root_path="/v1/recipes")
    application.include_router(ping.router)
    application.include_router(recipes.router, tags=["recipes"])
    return application


app = create_application()


@app.on_event("startup")
async def startup_event():
    log.info("Starting up...")


@app.on_event("shutdown")
async def shutdown_event():
    log.info("Shutting down...")
```

recipes/service/schemas.py:

```python
from pydantic import BaseModel


class RecipesQueryInSchema(BaseModel):
    params: str


class RecipesQueryOutSchema(BaseModel):
    hits: str
```

recipes/tests/conftest.py:

```python
import pytest
from starlette.testclient import TestClient

from service.main import create_application


@pytest.fixture(scope="module")
def test_app():
    app = create_application()
    with TestClient(app) as test_client:
        yield test_client
```

recipes/tests/test_api_recipes.py:

```python
import json


class TestRecipesQuery:
    def test_query(self, test_app):
        query = "hello"
        result = test_app.post("/query", data=json.dumps({"params": query}))
        assert result.status_code == 200
        assert result.json()["hits"] == query
```

recipes/tests/test_schemas.py:

```python
from service.schemas import RecipesQueryOutSchema


class TestQuerySchema:
    def test_success(self):
        schema = RecipesQueryOutSchema(**{"hits": "test"})
        assert schema.hits == "test"
```

Build and run it:

```shell
$ docker-compose up -d --build
```

Check the status:

```text
$ docker-compose ps
            Name                          Command               State                 Ports
-------------------------------------------------------------------------------------------------------
search-in-firestore_nginx_1     /docker-entrypoint.sh ngin ...   Up      80/tcp, 0.0.0.0:8080->8080/tcp
search-in-firestore_recipes_1   uvicorn service.main:app - ...   Up      0.0.0.0:6758->8000/tcp
```

With everything up and running, run the tests:

```text
$ docker-compose exec recipes python -m pytest
============================== test session starts ==============================
platform linux -- Python 3.8.3, pytest-5.4.2, py-1.10.0, pluggy-0.13.1
rootdir: /recipes
plugins: cov-2.10.1
collected 2 items

tests/test_api_recipes.py .                                               [ 50%]
tests/test_schemas.py .                                                   [100%]

=============================== 2 passed in 0.04s ===============================
```

Manual sanity checks with curl:

$ curl -w "\n" localhost:6758/ping

{"ping":"pong!","environment":"dev","testing":false}

$ curl -w "\n" -d '{"params": "hello"}' localhost:6758/query

{"hits":"hello"}

The same checks through the API gateway (NGINX):

```shell
$ curl -w "\n" localhost:8080/v1/recipes/ping
{"ping":"pong!","environment":"dev","testing":false}

$ curl -w "\n" -d '{"params": "hello"}' localhost:8080/v1/recipes/query
{"hits":"hello"}
```

Firestore Event Listener skeleton

The purpose of this service is to listen to changes in Firestore and relay them to Recipes Query so that it can update the recipe index.

The simplest way to intercept Firestore events is a Cloud Function with a Firestore trigger.
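A Firestore trigger delivers the document as it was before the change (`oldValue`) and after it (`value`), which is what lets the listener tell recipe adds, updates and deletes apart. A minimal sketch, assuming that a missing or empty `oldValue` means the document was just created and a missing or empty `value` means it was deleted (`classify_event` is a hypothetical helper, not part of the skeleton):

```python
from typing import Any, Dict


def classify_event(data: Dict[str, Any]) -> str:
    """Return "create", "update" or "delete" for a Firestore trigger payload.

    Assumption: an absent or empty oldValue/value means the document
    did not exist before/after the write.
    """
    existed_before = bool(data.get("oldValue"))
    exists_after = bool(data.get("value"))
    if exists_after and not existed_before:
        return "create"
    if existed_before and not exists_after:
        return "delete"
    if existed_before and exists_after:
        return "update"
    raise ValueError("event has neither oldValue nor value")
```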

The Firestore Emulator and the Functions Framework help with testing the function locally. Let's add an Event Listener service placeholder and the Firestore Emulator:

docker-compose.yml:

```yaml
version: '3.8'

services:
  recipes:
    build: ./recipes
    command: uvicorn service.main:app --reload --workers 1 --host 0.0.0.0 --port 8000
    volumes:
      - ./recipes/:/recipes
    ports:
      - 6758:8000
    environment:
      - ENVIRONMENT=dev
      - TESTING=0

  firestore_emulator:
    build:
      context: ./events_listener/
      dockerfile: Dockerfile-firestore-emulator
    # The folded scalar (>) joins these lines with spaces, so no
    # backslash line continuation is needed inside the quoted command.
    command: >
      /bin/bash -c
      "java -jar /root/.cache/firebase/emulators/cloud-firestore-emulator-*.jar
      --functions_emulator events_listener:8002 --host 0.0.0.0 --port 8001"
    ports:
      - 6759:8001

  events_listener:
    build:
      context: ./events_listener/
      dockerfile: Dockerfile-functions
    command: >
      functions-framework
      --target=firestore_event
      --signature-type=event
      --port=8002
    environment:
      - FIRESTORE_EMULATOR_HOST=firestore_emulator:8001
    depends_on:
      - firestore_emulator

  nginx:
    image: nginx:1.19.8
    ports:
      - 8080:8080
    volumes:
      - ./endpoints/nginx.conf:/etc/nginx/conf.d/default.conf
    depends_on:
      - recipes
```

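events_listener/Dockerfile-firestore-emulator:

```dockerfile
FROM openjdk:16-slim-buster

# Node.js and firebase-tools are only used to download the Firestore emulator
# JAR into /root/.cache/firebase/emulators; docker-compose runs it with java.
RUN apt-get update && apt-get install -y curl \
  && curl -sL https://deb.nodesource.com/setup_15.x | bash - \
  && apt-get install -y nodejs \
  && curl -L https://www.npmjs.com/install.sh | sh \
  && npm install -g firebase-tools \
  && firebase setup:emulators:firestore
```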

events_listener/Dockerfile-functions:

```dockerfile
FROM python:3.8.3-slim-buster

ARG PORT
ENV APP_HOME /app
WORKDIR $APP_HOME
ENV PYTHONUNBUFFERED TRUE

# Copy local code to the container image.
COPY . .

# Install production dependencies.
RUN pip install gunicorn functions-framework
RUN pip install -r requirements.txt
```

events_listener/main.py:

```python
import json


def firestore_event(data, context):
    """Triggered by a change to a Firestore document.

    Args:
        data (dict): The event payload.
        context (google.cloud.functions.Context): Metadata for the event.
    """
    trigger_resource = context.resource
    print("Function triggered by change to: %s" % trigger_resource)

    # oldValue/value can be empty or missing for creates and deletes,
    # so read them with .get() instead of indexing.
    print("\nOld value:")
    print(json.dumps(data.get("oldValue", {})))

    print("\nNew value:")
    print(json.dumps(data.get("value", {})))

    # nothing here yet
```
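The printed documents use Firestore's typed value encoding, where each field is wrapped in its type, for example `{"title": {"stringValue": "Beef stew"}}`. A search index wants plain values, so before relaying a recipe to Recipes Query the listener will likely need to flatten them. A sketch covering the common types, assuming the payload follows the Firestore `Value` JSON format (`decode_value` and `decode_fields` are hypothetical helpers; timestamps, references, bytes and geo points are returned in their raw form):

```python
from typing import Any, Dict


def decode_value(value: Dict[str, Any]) -> Any:
    """Convert one Firestore typed value into a plain Python value."""
    if "stringValue" in value:
        return value["stringValue"]
    if "integerValue" in value:
        return int(value["integerValue"])  # 64-bit integers are typically encoded as strings
    if "doubleValue" in value:
        return float(value["doubleValue"])
    if "booleanValue" in value:
        return value["booleanValue"]
    if "nullValue" in value:
        return None
    if "mapValue" in value:
        return decode_fields(value["mapValue"].get("fields", {}))
    if "arrayValue" in value:
        return [decode_value(item) for item in value["arrayValue"].get("values", [])]
    # timestampValue, referenceValue, bytesValue, geoPointValue: keep the raw payload.
    return next(iter(value.values()))


def decode_fields(fields: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Flatten a document's "fields" map into a plain dict."""
    return {name: decode_value(value) for name, value in fields.items()}
```

Inside `firestore_event`, this would be applied as `decode_fields(data.get("value", {}).get("fields", {}))`.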

Structure of the project:

```text
.
├── docker-compose.yml
├── endpoints
│   └── nginx.conf
├── events_listener
│   ├── Dockerfile-firestore-emulator
│   ├── Dockerfile-functions
│   ├── main.py
│   └── requirements.txt
└── recipes
    ├── Dockerfile
    ├── requirements.txt
    ├── service
    │   ├── api
    │   │   ├── __init__.py
    │   │   ├── ping.py
    │   │   └── recipes.py
    │   ├── config.py
    │   ├── __init__.py
    │   ├── main.py
    │   └── schemas.py
    └── tests
        ├── conftest.py
        ├── test_api_recipes.py
        └── test_schemas.py

6 directories, 18 files
```

Build and run it:

```shell
$ docker-compose up -d --build
```

Check the status:

```text
$ docker-compose ps
                  Name                                Command               State                 Ports
------------------------------------------------------------------------------------------------------------------
search-in-firestore_events_listener_1      functions-framework --targ ...   Up
search-in-firestore_firestore_emulator_1   /bin/bash -c java -jar /ro ...   Up      0.0.0.0:6759->8001/tcp
search-in-firestore_nginx_1                /docker-entrypoint.sh ngin ...   Up      80/tcp, 0.0.0.0:8080->8080/tcp
search-in-firestore_recipes_1              uvicorn service.main:app - ...   Up      0.0.0.0:6758->8000/tcp
```

A manual sanity check with curl that the Firestore Emulator is running:

```shell
$ curl localhost:6759
Ok
```

Summary

This post introduced the project structure and the basic building blocks that serve as the foundation for the next post, where we start wiring them together.